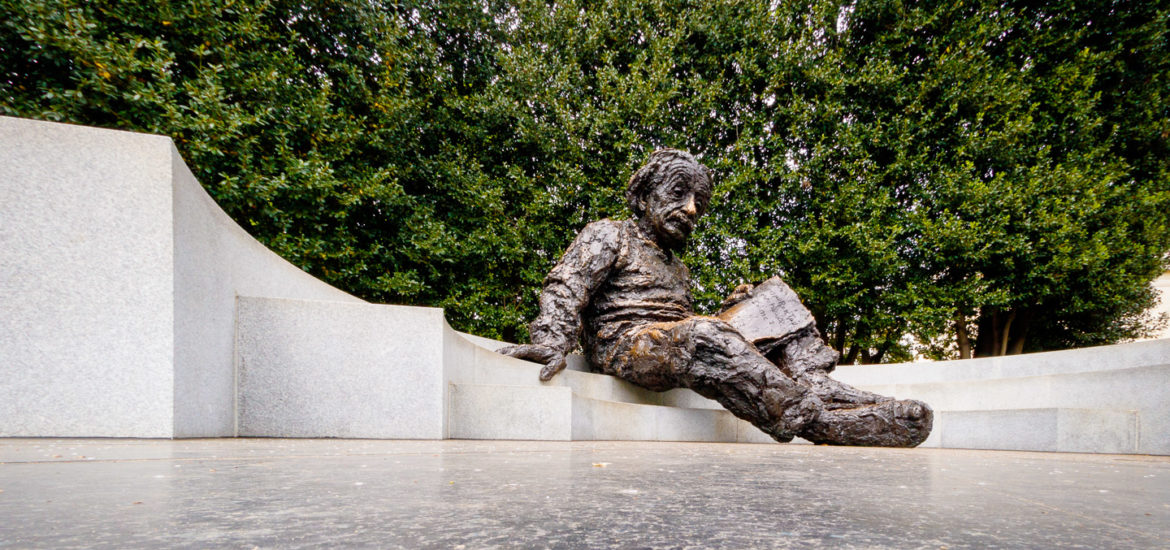

— The Einstein Memorial – National Academy of Sciences, 2101 Constitution Ave NW, Washington, DC 20418

“New uses of speech technologies are changing the way people interact with companies, devices, and each other. Speech frees users from keyboards and tiny screens and enables valuable, effective interactions in a variety of contexts.”

Clearly focused, SpeechTEK 2017 was intended for anyone wanting to learn about deploying speech technology for business applications. The fast-moving three-day event featured keynote presentations, panel discussions, technical presentations, hands-on workshops, case studies, and more, organized in four simultaneous tracks:

- CONVERSATIONAL INTERFACES

- DIGITAL TRANSFORMATION

- VIRTUAL ASSISTANTS

- NEXT BIG THING

Gerry McGovern, Founder & CEO, Customer Carewords, keynoted the first day of the conference, which is traditionally a very inspiring event. Gerry didn’t disappoint, and some thought-provoking quotes are still hounding me a week later: “Why are so many more people in our organizations allowed to add content to web sites than are allowed to delete it? Why did some web sites more than double their number of page views after removing half the content, most of which had never been viewed?”

–Crispin Reedy

Sumeet Vij, Chief Technologist at Booz Allen Hamilton, talked about how to enhance virtual assistant intelligence with search, recommendation, and semantic technologies, and how their deep search implementation extracts meaning by considering related words. Sumeet recommended taking a look at the 2nd edition of Booz Allen Hamilton’s FIELD GUIDE TO DATA SCIENCE.

Other resources mentioned:

- DEXi: Open-source project for Multi-Attribute Decision Making

- Elasticsearch: RESTful search and analytics engine
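The idea of extracting meaning by considering related words can be illustrated with simple query expansion. The following is only a minimal sketch and not the Booz Allen Hamilton implementation: the `RELATED` table, the `expand` and `search` functions, and the sample data are all hypothetical, and a production system would more likely rely on a search engine's synonym handling or on learned word embeddings.

```python
from typing import Dict, List, Set

# Hypothetical, hand-made table of related words (an assumption for this
# sketch); a real system might derive these from a thesaurus or embeddings.
RELATED: Dict[str, Set[str]] = {
    "car": {"automobile", "vehicle"},
    "price": {"cost", "fee"},
}


def expand(query: str) -> Set[str]:
    """Return the query terms plus any related words we know about."""
    terms = set(query.lower().split())
    for term in list(terms):
        terms |= RELATED.get(term, set())
    return terms


def search(query: str, documents: List[str]) -> List[str]:
    """Rank documents by how many expanded query terms they contain."""
    wanted = expand(query)
    scored = []
    for doc in documents:
        overlap = len(wanted & set(doc.lower().split()))
        if overlap:
            scored.append((overlap, doc))
    # Highest overlap first; documents without any match are dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored]


if __name__ == "__main__":
    docs = ["The automobile cost is high", "Weather today is sunny", "car rental"]
    print(search("car price", docs))
```

With this expansion, a query for “car price” also finds a document that only mentions “automobile” and “cost”, which a plain keyword match would miss.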

Leslie Spring is CEO and Founder of Cognitive Code, a privately held company that develops conversational artificial intelligence systems based on its SILVIA technology. Leslie promoted the idea of performing speech recognition on the device, to reduce data transmission and compute costs and to address privacy concerns, for instance when speech recognition is used in toys.

An engaging interaction with a voice user interface does not necessarily require an avatar; a disembodied voice can still be very engaging. But here is a demo of the SILVIA Avatar:

No matter how often you hear Dr. William Meisel (President, TMA Associates and Editor of LUI News) speak, there are always some new ideas. Bill stated that, at least for the near term, company-specific digital assistants will have to stay extremely focused within the company’s context [domain]. The quality of speech recognition has become so good that he considers it “done”, and the industry’s focus has now shifted to NLU. Specifically for areas like customer support and help, where he mentioned the following channels (Web, mobile, SMS, PC apps, IVR, and new devices like Echo and Google Home), he recommended not developing directly for a channel, but building an abstraction layer or APIs instead.
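To make the abstraction-layer recommendation a bit more concrete, here is a minimal sketch of what such a layer could look like. Everything in it is an assumption for illustration (the `Reply` class, `handle_request`, the `RENDERERS` table, and the sample answers are hypothetical): the dialog logic lives in one place, and each channel only gets a thin renderer.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Reply:
    """Channel-neutral answer produced by the shared dialog logic."""
    text: str


def handle_request(utterance: str) -> Reply:
    """Hypothetical shared logic: the one place that decides what to answer."""
    if "hours" in utterance.lower():
        return Reply("We are open from 9 am to 5 pm.")
    return Reply("Sorry, I can only answer questions about opening hours.")


# One thin renderer per channel; only the presentation differs.
# (No escaping is done here, which a real renderer would need.)
RENDERERS: Dict[str, Callable[[Reply], str]] = {
    "sms": lambda r: r.text[:160],                # keep within one SMS
    "web": lambda r: f"<p>{r.text}</p>",          # simple HTML fragment
    "ivr": lambda r: f"<speak>{r.text}</speak>",  # SSML-style wrapper for TTS
}


def respond(channel: str, utterance: str) -> str:
    """Entry point every channel calls; the logic is never duplicated."""
    reply = handle_request(utterance)
    return RENDERERS[channel](reply)


if __name__ == "__main__":
    for channel in RENDERERS:
        print(channel, "->", respond(channel, "What are your hours?"))
```

Adding a new device then means adding one renderer, while the answers themselves stay identical across all channels.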

Bill mentioned the concept of a “SwitchAgent”, i.e., a human who takes over from a bot without letting the customer know that they are now being served by a human.

In Bill’s opinion, Nuance and, to some extent, Google most likely have an advantage in developing interaction models, due to their previous exposure to certain industries and domains (Nuance in banking and finance, for example).

Nandini Stocker, Conversation Design Lead at Google, reminded us once again that there is no fast-forward in a voice user interface, that flowcharts for larger VUIs break down quickly, and that they very often do not reflect what a real conversation sounds like.

Design principles quick reference (PDF): //wolfpaulus.com/wp-content/uploads/2017/05/design-principles-quick-reference.pdf

Resources mentioned:

- //developers.google.com/actions/design/

- http://avixd.org The Association for Voice Interaction Design

- http://discourse.ai (Susan Hura’s new company)

Some quotes that were mentioned in the Sunrise Discussions of Crispin Reedy, Senior VUI Design Consultant at Versay:

“Just this week, Facebook said it was “refocusing” its use of AI after its bots hit a failure rate of 70 percent, meaning bots could only get to 30 percent of requests without some sort of human intervention.”

“I would call it overpromising. Brands that created bots with a structured request or utility like Domino’s or in retail were easy. But bots that tried to break out of the utility and be chatbots became the problem.”

— Joe Corr, Creative Tech Director, who built the Domino’s bot.

Dan Miller (Lead Analyst-Founder at Opus Research) moderated the keynote panel with Dr. William Meisel, President of TMA Associates and Editor of LUI News; Jay Wilpon, SVP, Natural Language Research at Interactions; and Brian Garr, COO at Cognitive Code.

Like many before him, Jay emphasized focusing strictly on a narrow domain, and doing so not only for NLU but for speech recognition as well. After some success in SR and NLU, the next area has to be natural language generation and sentence planning. Brian said there is no Uncanny Valley in text-to-speech. It was also mentioned how much opportunity is being lost by trying to minimize the duration of IVR calls instead of engaging with the customer. Bill Meisel: “The closest thing to plugging a wire into your brain, is using spoken language.”

Michael McTear, Professor, Ulster University, asked in his presentation: What Is So New About Chatbots? Haven’t we seen it all before?

“Most chatbots disappoint. Outside of narrow applications, current chatbots are hard to use and frustrate customers with outright usability failures like not setting expectations or acting in unexpected ways. With the hype around bots, everyone is running around like headless chickens to build bots that will solve every existing problem in the world.”

“Tools make it easy to create dialogs but do not guarantee good dialog design”

“What we fail to realize is that underlying technologies like A.I./NLP (natural language processing) are not advanced enough to give us that natural conversational experience for everything, and we often end up with setting wrong expectations”

Takeaways from Mike’s session:

- Don’t ignore lessons from the past

- Get involved in current initiatives in the Speech community

  - AVIxD: Association for Voice Interaction Design

  - W3C community: Standards for Virtual Agents

- Conversation may be natural but that doesn’t make it easy

Dan Miller (Lead Analyst-Founder at Opus Research) talked in his own session about Voice Services in the World of Bots. He stressed the importance of a single “truth” when communicating through multiple “channels”.

Speech technology and chatbots seem to hit a nerve more easily than other UX technologies. I have never heard many complaints about the many poorly designed websites out there. We simply used to make fun of companies that had animated GIFs on their sites. Also, I don’t think there is much emotional energy in discussions where we complain about the many poorly engineered and designed mobile apps; we simply don’t install them, or we uninstall them. This seems to be different with voice and chat apps. The emotional energy with which people complain about poorly behaving chatbots and, for instance, Alexa Skills is quite remarkable.

Conversational UI is a Minefield

With VR/AR user interfaces, we leave behind the 2D communication of the mouse pad as we move toward saying exactly what we mean or want. Once used to “talking” with Siri or Alexa, users tend to use voice elsewhere. Chatbots seem to be a transitional step to a future where we “talk” to services. This presentation focuses on voice vs. chat user interfaces and introduces attributes of good chatbot user interfaces.

SpeechTEK 2017 was a great success. This long-running conference attracted many first-time attendees, who, in my opinion and by my observation, were not disappointed. The quality of the presentations was mostly great, sometimes outstanding, and I’m already looking forward to SpeechTEK 2018: April 9 – 11, 2018, at the Renaissance Washington, DC Hotel, Washington, DC.