The Applied Voice Input Output Society (AVIOS) and TMA Associates organize the annual Mobile Voice Conference, which this year took place at the Westin in San Jose, California, on April 11 and 12.

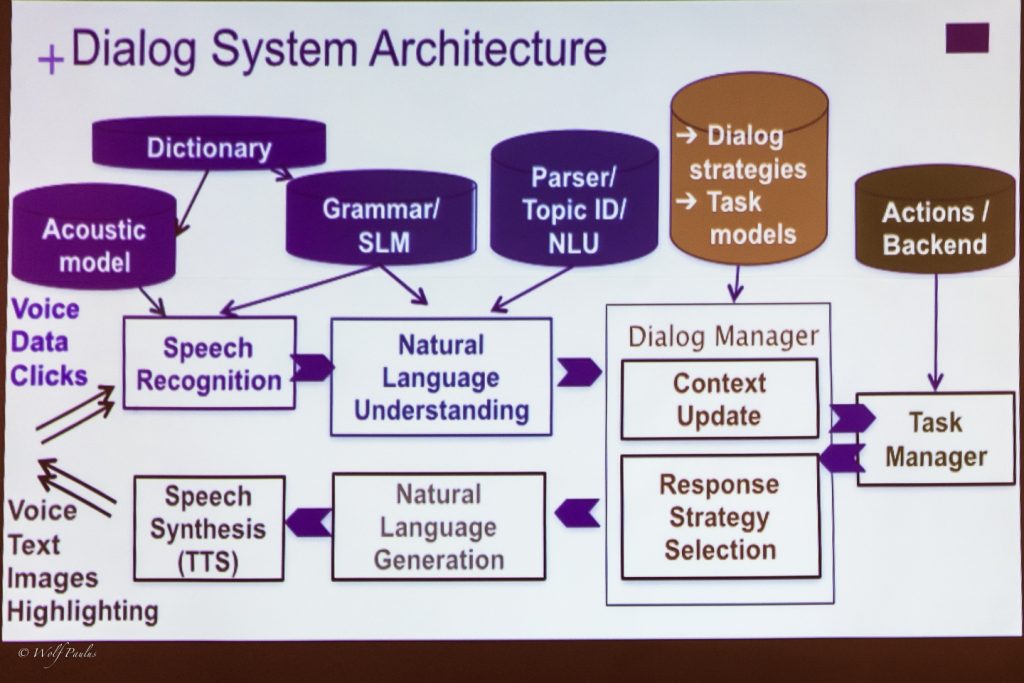

Recognizing that speech recognition, speech synthesis, and language interpretation have matured, the Mobile Voice Conference 2016 focused on Language User Interfaces and explored trends towards Conversational User Interfaces.

While WIMP (Windows, Icons, Menus, Pointer) based user interfaces are getting more complex and complicated (e.g., force-touch), a language user interface (using text or voice input) can often deliver results more conveniently, i.e., easier and faster. Prominent examples of such language user interfaces are Siri, Cortana, Google Now, and Alexa. While promising, their rule-based (versus learning) nature exposes their limits rather quickly.

While language user interfaces cover both voice and text input, having a user’s voice input allows the detection of the user’s emotional state. Moreover, voice input also allows at least for personalization, if not for authentication (voice biometrics), which currently still requires a voice recording 10 to 15 seconds long.

AVIOS President Bill Scholz kicked off the Mobile Voice Conference 2016 with a keynote panel:

- Nick Landry, Senior Technical Evangelist at Microsoft

- Todd Mozer, CEO of Sensory

- Jay Wilpon, SVP Natural Language Research at Interactions

Edgar Kalns, Director of Platform, SRI International, emphasized the importance and connection of the Internet of Things and Voice User Interfaces. In his view, the future of user interfaces is conversational, observes and reacts to behavioral cues, is adaptive, and is of course multimodal. With regard to conversational user interfaces, Edgar recommended looking into open-source Natural Language Generation (NLG) solutions.

Dr. Kathy Brown, Chief Data Scientist and Vice President of Speech Sciences at [24]7, mentioned that hardly anyone can express all required entities (what the Alexa Skills Kit calls ‘slots’) in one turn. This is a phenomenon we also experienced when asking QuickBooks users to voice-input receipt data.
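To make the point about slots concrete, here is a minimal Python sketch of multi-turn slot filling: the dialog keeps whatever the user already said and asks only for what is still missing. This is not how [24]7, Alexa, or QuickBooks implement it; the slot names, prompts, the `DialogState` class, and the sample values are all made up for illustration, and the slot extraction itself (the NLU step) is assumed to happen elsewhere.

```python
from dataclasses import dataclass, field

# Hypothetical slots for a receipt-entry intent (illustrative names only).
REQUIRED_SLOTS = ("vendor", "amount", "date")

# Hypothetical follow-up questions, one per slot.
PROMPTS = {
    "vendor": "Who was the vendor?",
    "amount": "What was the total amount?",
    "date": "What is the date on the receipt?",
}


@dataclass
class DialogState:
    """Accumulates slot values across several user turns."""
    slots: dict = field(default_factory=dict)

    def update(self, extracted: dict) -> None:
        # Merge the slots an (assumed) NLU step extracted from the latest turn.
        for name, value in extracted.items():
            if name in REQUIRED_SLOTS and value:
                self.slots[name] = value

    def missing(self) -> list:
        # Slots the user has not provided yet, in prompt order.
        return [name for name in REQUIRED_SLOTS if name not in self.slots]

    def next_prompt(self):
        # Ask for the first missing slot; None means the intent is complete.
        remaining = self.missing()
        return PROMPTS[remaining[0]] if remaining else None


# Turn 1: the user mentions only the vendor and the amount.
state = DialogState()
state.update({"vendor": "Acme Office Supply", "amount": "23.40"})
first_follow_up = state.next_prompt()

# Turn 2: the user answers the follow-up question.
state.update({"date": "2016-04-11"})
second_follow_up = state.next_prompt()
```

After the first turn the sketch asks for the date; after the second turn nothing is missing, so `next_prompt()` returns `None` and the application could go ahead and record the receipt.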

In one of the shortest, but also one of the best, talks of the conference, Jeanine Heck, Executive Director at Comcast, talked about the six million voice-enabled remote controls Comcast has rolled out with the Xfinity X1 set-top box. When I met Jeanine three years ago, voice was just a small side project for her, but it has now turned into a full-time job. A small team started by building the language model, and a remote-control app, rolled out on iOS and Android, helped to collect data to improve the model. “That’s how we learned how people wanted to talk to their TVs.” Jeanine recommended logging and measuring everything, and said that even now, with over six million voice-enabled remotes in users’ hands, they still regularly listen in manually. Her advice: Solve a problem, do that one thing right, and try to be unique.

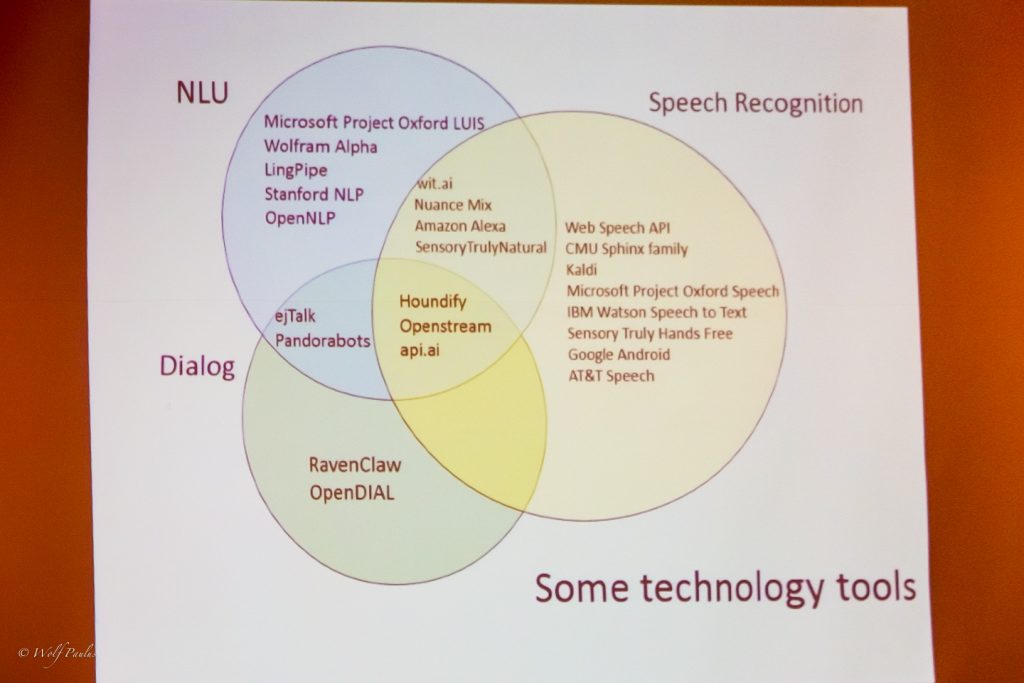

Deborah Dahl, Principal, Conversational Technologies, always one of my favorite speakers, provided unbiased information you can trust and put to work immediately. Here she is segmenting the space into NLU, Speech Recognition, and Dialog-Management tools.

If I had the chance to go back to college, I would probably try to catch a few of her lectures. I’m talking about Dr. Marie Meteer, an Associate Professor in Computer Science and Computational Linguistics at Brandeis University, where she teaches courses such as speech recognition and mobile application development.

William Meisel, President, TMA Associates, was, as always, busy moderating several panels and giving several talks during the two-day conference. He recently experimented with Microsoft’s Language Understanding Intelligent Service (https://www.luis.ai/) and stated that we are experiencing a fundamental shift in user interfaces. Bill said, “the Amazon Echo is the closest thing to Science Fiction .. much friendlier and almost social. It’s much faster and doesn’t drop you into a web page, the first instance it doesn’t have an answer.”

This was my fourth attendance in as many years, and for the third time I had the opportunity to speak at this limited-attendance event. As always, my talk was not uncontroversial, just as it should be: Patterns for Natural Language Applications – beyond declaring User Intents.